Editorial for CEOI '17 P5 - Palindromic Partitions

Submitting an official solution before solving the problem yourself is a bannable offence.

Let us think about the problem of creating an optimal (i.e. longest) chunking in terms of an iterative process of chipping off equal-sized chunks from both sides of the input string .

Exhaustive search. How large should these chipped-off prefixes and suffixes be at each step? Naively, we can try chipping off every possible length , then recursively figure out the optimal chunking for the remainder:

The expression above uses Python-like notation: is the prefix of length

of

,

is the suffix, and

is everything but the prefix and suffix.

Dynamic programming. This solution takes time exponential in the length of the string, and will only solve the easiest set of inputs. However, note that the input to is fully defined just by its length. It follows that there are only

possible different calls to

, and also that we can pass that length, rather than the actual substring of

, to

. If we memoize the function outputs, this results in an

runtime. This (or the equivalent dynamic programming solution without memoization) solves the first two sets of inputs.

Greedy. We do not have to consider all values of , however. A greedy approach, always chipping off the smallest possible chunk, gives optimal results. Let us sketch the proof (in the interest of space, notation is not fully formal).

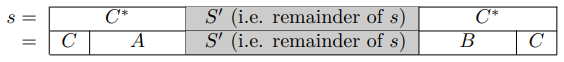

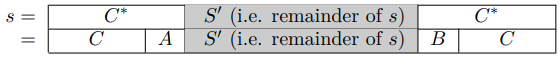

Without loss of generality, assume that the greedy algorithm and the optimal algorithm differ in the first chunk they chip off the input string : the greedy algorithm selects a chunk

, and the optimal algorithm selects a strictly larger chunk

,

. Let us denote the prefix/suffix as

and

:

We consider two cases:

.

Equating

gives us

, where

is possibly an empty string. So chunking the prefix/suffix

as

yields a longer palindrome than the "optimal" chunking of

. Contradiction.

.

Equating

and taking into account

gives us

for some prefix

of

; note that

is both a prefix and a suffix of

. We can keep applying the same logic to get

, until

.

is both a prefix and a suffix of

(by the same logic that

was), and thus of

. This prefix and suffix do not overlap, because

. So

can be written as

for some

, and could be chunked more finely. Contradiction as in case 1.

Since both cases lead to a contradiction, we conclude that the greedy solution is optimal.

A straightforward implementation of the greedy approach solves the first three sets of inputs. It still takes time because the string equality test (to determine whether a chunk of size

can be chipped off) takes

.

Rolling hashes. As a final optimization step, we can speed up string comparison using rolling hashes. Let us denote the ASCII value of a character with

, and choose a prime

larger than

, e.g.

. We can then define the hash of a sequence of characters

as

, where

is another suitably large prime. As we grow our candidate prefix and suffix, looking for the first matching pair, we only perform the expensive string comparison when the hash values of the prefix and the suffix match. These hash values can be updated in

for each additional character, and strings can be compared in approximately

(neglecting the cases where hash comparison yields a false positive), thus roughly

for the input overall.

Deterministic time complexity. A greedy solution with naive string equality testing is not that bad as long as the chunks are small enough. On the other hand, we could process the entire remaining string to find the smallest chunk in . We can use the z-algorithm or KMP's failure function for this purpose. But that would again waste a lot of time if the smallest chunk turns out to be small. However, we can make a compromise. Choose a length threshold

and use naive equality testing for

. If that doesn't yield a solution, process the entire string. Worst case time complexity is

. Choosing

gives an

solution.

A deterministic linear-time solution also exists, although we are not aware of one that is practical to implement in a contest. Let us build a suffix array and store for every suffix

its index

in the suffix array. Assume that we have already chipped off

characters on each side of

, and that we are trying to determine if

is a good size for the next prefix and suffix; in other words, if

. The size of a chunk

defines a range

of relevant suffixes in the suffix array and

is a good size exactly when

. The bottleneck of this solution is in adjusting the bounds of the range

and

when increasing

. Building a suffix tree to traverse and determine bounds

and

instead of binary searching over a suffix array leads to a linear-time solution.

Comments